#Lorenz Driven Operationally Deterministic Ising Model

#Please cite me for credit if you are planning to use my code

#Operation:

# Only the initial state is randomized. all else (core functions) operates deterministically.

# Here we deviate from the traditional Ising Model which are probabilistic, stochastic in operation:

#X(t),Y(t),Z(t) are coupled through a system of continuous non-linear differential equations.

# raw Y(t) -> used to select sites.

# normalized X(t) -> used to define spin flip conditions.

# raw Z(t) + forcing -> equation where we add our forcing component (forcing = 0 if you want pattern formation to occur)

# The free parameters of the Lorenz equation(r,s,b) are material specific and temperature independent properties of the material

# which I aptly call "spin-sensivity parameters" of a material on which the experiment is being conducted.

# Model Motivation & Model Outcome:

#The goal here is to bridge the gap between theory and practice of solid state physics by abandoning random.random()

#for the internal operations of the Ising model.

#The Ising Model is an ontologically deterministic spin system, but we are limited in

#knowing the initial conditions due to finite precision from our measurement instruments. Different potential patterns that emerge vary.

#depending on perturbing our inputs which we simulate due to said limitations in the knowledge of the initial condition of

#the system.These are extrinsic limitations. In addition the model has built structural limitations that it acknowledges:

#computers have finite resolution and time steps despite the Lorenz model being innately continuous rather than discrete

#.So our model reflects this intrinsic limitation and should provide fruitful study for how extrinsic and intrinsic

#limitations interact with one another in limiting predictability of the exact patterns formed in experiment.

#the coupled equations in real life. The coupled nature of the equations in addition to this intrinsic limitation with delta #time and fidelity could also give the model more utility to the modeler over simplistic substitution of random.random with #one dimensional discrete chaotic map where time steps are discrete f: x(t) -> x(t+1) whereas my model can do outputs for t+0.001.

# The consequence being that different pattern formation appears "stochastic" due to limitations in knowledge of the initial conditions.

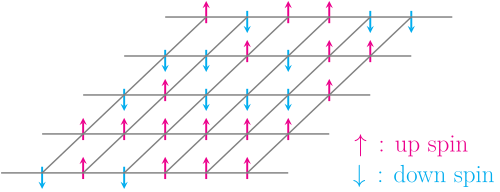

#At the same time we have a "know how" that some kind of pattern of spin 'up' and 'down' will eventually emerge due to #dropping temperature.

#when you add a forcing onto Z you are altering the geometry of the attractor and thus altering the effect of the spin-#sensitivity parameters on the spin of the material on it. You consider it akin to chemical or physical alteration of a #material. This means we may be able to study the Ising with time dependent forcing with reversible alterations of the material

#Potential Numerical Advantages:

# pre-packaged inputs would offer and speed and predictability in computation over random.random() operations.

import numpy as np

import matplotlib.pyplot as plt

from decimal import Decimal, getcontext, ROUND_HALF_UP

def lorenz_step(state, dt=0.01, s=15, r=23, b=2):

x, y, z = state

x_dot = s * (y - x)

y_dot = r * x - y - x * z

z_dot = x * y - b * z

new_state = np.array([x + x_dot * dt, y + y_dot * dt, z + z_dot * dt])

return new_state

class LorenzRNG:

def __init__(self, initial_state=(0.0, 1.0, 1.05), dt=0.01, buffer_size=1000, skip_transient=500):

self.state = np.array(initial_state)

self.dt = dt

self.x_buffer = []

self.transient_counter = 0

self.skip_transient = skip_transient

self.buffer_size = buffer_size

def next(self):

self.state = lorenz_step(self.state, self.dt)

x, y, _ = self.state

if self.transient_counter < self.skip_transient:

self.transient_counter += 1

return 0.5, y

self.x_buffer.append(x)

if len(self.x_buffer) > self.buffer_size:

self.x_buffer.pop(0)

x_min = min(self.x_buffer)

x_max = max(self.x_buffer)

if x_max != x_min:

x_norm = (x - x_min) / (x_max - x_min)

else:

x_norm = 0.5

x_norm = np.clip(x_norm, 0, 1)

return x_norm, y

XMAX, YMAX = 50, 50

N = XMAX * YMAX

def delta_energy(x, y, grid):

return grid[x, y] * (

grid[(x + 1) % XMAX, y] + grid[(x - 1) % XMAX, y] +

grid[x, (y + 1) % YMAX] + grid[x, (y - 1) % YMAX]

)

def switch_spin(x, y, grid):

grid[x, y] *= -1

def grid_with_finder(rows, columns, fidelity, target_num):

getcontext().prec = 28

getcontext().rounding = ROUND_HALF_UP

fidelity_decimal = Decimal(str(fidelity))

target_decimal = Decimal(str(target_num))

grid_max = rows * columns * fidelity_decimal

if grid_max != 0:

adjusted_target = (target_decimal % grid_max) if target_decimal >= 0 else (grid_max - (abs(target_decimal) % grid_max))

else:

adjusted_target = Decimal('0')

grid = []

current_value = Decimal('0')

for y in range(rows):

row = []

for x in range(columns):

wrapped_value = current_value % grid_max if grid_max != 0 else current_value

quantized_value = wrapped_value.quantize(fidelity_decimal)

row.append(quantized_value)

current_value += fidelity_decimal

if y % 2 == 1:

row.reverse()

grid.insert(0, row)

closest = None

min_diff = float('inf')

closest_x, closest_y = -1, -1

for y in range(rows):

for x in range(columns):

diff = abs(grid[y][x] - adjusted_target)

if diff < min_diff:

min_diff = diff

closest = grid[y][x]

closest_x, closest_y = x, y

return grid, closest, (closest_x, rows - 1 - closest_y), adjusted_target

def metropolis_step(grid, temperature, lorenz_rng, scaling_factor=0.153):

x_norm, raw_y = lorenz_rng.next()

_, _, (x, y), _ = grid_with_finder(XMAX, YMAX, scaling_factor, raw_y)

dE = delta_energy(x, y, grid)

if dE <= 0:

switch_spin(x, y, grid)

else:

if x_norm < np.exp(-2 * dE / temperature):

switch_spin(x, y, grid)

def temperature_schedule(step, total_steps, T_start, T_end):

return T_end + (T_start - T_end) * np.exp(-5 * step / total_steps)

def run_simulation_with_snapshots(T_start, T_end, snapshot_steps, lorenz_initial_state, scaling_factor=0.153, initial_grid=None):

lorenz_rng = LorenzRNG(initial_state=lorenz_initial_state, dt=0.01)

if initial_grid is None:

grid = np.random.choice([-1, 1], size=(XMAX, YMAX))

else:

grid = np.copy(initial_grid)

snapshots = {}

total_steps = max(snapshot_steps)

for step in range(1, total_steps + 1):

temperature = temperature_schedule(step, total_steps, T_start, T_end)

for _ in range(XMAX * YMAX):

metropolis_step(grid, temperature, lorenz_rng, scaling_factor=scaling_factor)

if step in snapshot_steps:

snapshots[step] = np.copy(grid)

return snapshots

np.random.seed(42)

initial_grid = np.random.choice([-1, 1], size=(XMAX, YMAX))

fixed_initial_condition = (0.15, 2.32, 6.04)

scaling_factors = [0.0001]

snapshot_steps = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

T_start = 100

T_end = 0.1

fig, axes = plt.subplots(len(scaling_factors), len(snapshot_steps), figsize=(16, 3 * len(scaling_factors)))

for i, scale in enumerate(scaling_factors):

snapshots = run_simulation_with_snapshots(

T_start, T_end, snapshot_steps, fixed_initial_condition,

scaling_factor=scale,

initial_grid=initial_grid

)

for j, step in enumerate(snapshot_steps):

ax = axes[i, j] if len(scaling_factors) > 1 else axes[j]

ax.imshow(snapshots[step], cmap='gray', interpolation='nearest')

ax.set_title(f"Step {step}")

ax.axis('off')

plt.suptitle(f"Lorenz Driven Operationally Deterministic Ising Model. Scale: {scale}", fontsize=18)

plt.tight_layout(rect=[0, 0, 1, 0.96])

plt.show()